“Alexa, can you be hacked?” “Sorry, I’m not sure about that.”

Wherever technology pervades, hackers won’t be far behind, which means that your Alexa speaker – be it an Echo Dot or Echo Show – is already on the radar of the bad guys.

With Alexa constantly listening for commands, smart speakers make perfect bugging devices – if the bad guys can circumvent the security placed on them. Cue the barks of righteous indignation from I-told-you-sos everywhere who knew inviting Amazon into your home was a bad idea.

It was back in August 2017 that this high-profile hack first came to light. Mark Barnes of MWR Labs got busy with his soldering iron, did what the average person would struggle to even think of, and pulled off the kind of impressive proof of concept that could easily be refined and sold on to those with far less technical ability.

Nefarious ends could then run anywhere from simple eavesdropping to the theft of a user’s Amazon account. Nasty stuff.

The alarming part is that Barnes’s isn’t the only kind of hack a person could perform to make your Amazon Echo do things you’d rather it didn’t.

So how concerned should owners of an Alexa be? Well, here’s what you need to watch out for and how to stop it happening to you.

Voice squatting

One of the biggest security risks around Alexa right now is fake skills, a practice also known as voice squatting. Researchers at Indiana University were able to register skills with names that sounded like popular incumbents, exploiting accents and mispronunciations to elicit unwitting installations.

The study created Alexa skills and Google Actions that hoovered up slight nuances in people’s commands. In one example, the researchers created skills that played on the Capital One skill (a banking app), so that a bogus skill would be launched by “Alexa, start Capital Won” or “Capital One Please”.
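To see why such names are a problem, here is a minimal sketch of the kind of lookalike check a cautious owner (or a store reviewer) might run over skill names. It is an assumption-laden illustration, not the researchers’ method or Amazon’s vetting process: it uses plain spelling similarity from Python’s `difflib` as a crude stand-in for real phonetic matching, plus a prefix test for padded names, and the skill list and the 0.8 threshold are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical invocation names, including two lookalikes of the genuine "capital one".
ENABLED_SKILLS = ["capital one", "capital won", "capital one please", "daily weather"]

def lookalikes(target, names, threshold=0.8):
    """Flag names spelled close to `target`, or that simply extend it."""
    target = target.lower()
    hits = []
    for name in names:
        name = name.lower()
        if name == target:
            continue  # skip the genuine skill itself
        score = SequenceMatcher(None, target, name).ratio()
        # A high ratio catches near-identical spellings such as "capital won";
        # the prefix test catches padded names such as "capital one please".
        if score >= threshold or name.startswith(target):
            hits.append((name, round(score, 2)))
    return hits

print(lookalikes("Capital One", ENABLED_SKILLS))
# → [('capital won', 0.91), ('capital one please', 0.76)]
```

Note that “Capital One Please” only scores 0.76, so spelling similarity alone would miss it; that is why the prefix test is there. A real defence would compare how names sound, not how they are spelled.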

Amazon has since closed a loophole that let skills keep your smart speaker’s microphone listening after they appeared to have finished, which would effectively turn it into a listening device.

However, it’s still a good idea to keep an eye on the skills you have installed via the Alexa app. Some skills ask for payment information or boast the ability to hook up with other services, and the barrier to installing skills is low, so this is a problem that might not go away too quickly.

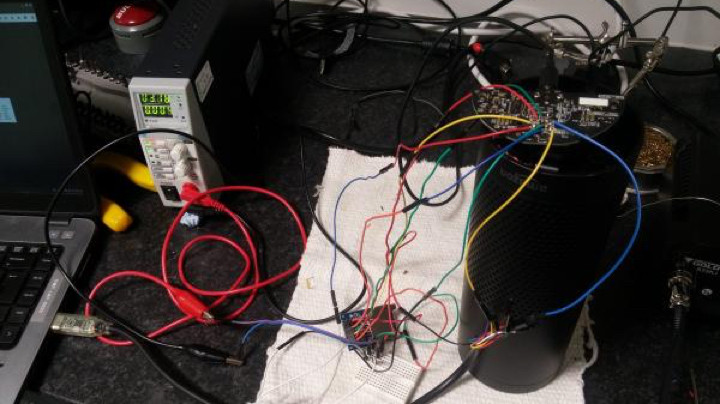

The Barnes Hack: Turning the Echo into a bugging device

Barnes had a good old dig at the Echo and discovered that you could remove the rubber base of the first-edition models to reveal a set of exposed connection pads, presumably used for debugging and testing during development.

He wired an SD card reader to one of these terminals and then proceeded to root the mutha with whatever software additions he desired. To turn the Echo into a listening device, he accessed its always-on microphone and streamed everything it heard to a remote computer elsewhere. Hey presto, a smart home bugging device.

The small print is that the rubber bottom and external access connection is only present on the first edition Echos from way back in 2015 and 2016. Later models don’t have that feature.

Barnes’s Echo might have looked a bit suspicious with all those wires sticking out of it, but there’s plenty of scope for fine-tuning the hack to keep all the bits and pieces invisible.

How to stop it

Well, if your Echo is a second-generation model or newer, then you’re automatically immune to this one.

For everyone else, the simple solution is not to let anyone set to work on your Echo with a blowtorch and a pair of pliers, so to speak.

Of course, the tampering could be done before you’ve even bought the thing, so make sure you buy direct from Amazon.

Finally, for the full belt-and-braces effect, hit the Mute button on top of the Echo if you’re saying anything that you really don’t want to be heard. According to Barnes, there’s no way to disable that with software.

The connected home hack: ‘Alexa, unlock the front door’

You can set Echo up as the centre of your smart home array. It’s easy to do and we certainly wouldn’t advise anyone against it. It is worth bearing in mind, though, that Alexa will talk to anyone.

Amazon’s virtual assistant doesn’t, by default, use voice recognition to check who is speaking before it acts on a command. So, in theory, if you put your Echo within earshot of the outside world, then a stranger standing near your windows, or your front or back door, could start making requests of Alexa. They could turn your lights off and on, tamper with your heating or even, possibly, unlock your doors.

Now, here’s the massive BUT. Smart locks which are Echo-enabled usually come with a second layer of security.

So, for example, the August Smart Lock Pro comes with the requirement that you set up a four-digit PIN that you need to say at the same time as the unlock command, and that should be enough to keep things safe.

Others require that your mobile phone is within Bluetooth range of the lock and the Yale model doesn’t allow unlocking by Echo at all. So, short of someone lurking in the bushes by your front door listening out for your PIN, you’re pretty safe here.
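The logic behind that second layer can be sketched in a few lines. This is a hypothetical illustration of the idea, not how August, Yale or Amazon actually implement it: the function name, the PIN and the optional phone-in-range flag are all assumptions. It uses Python’s `hmac.compare_digest`, a standard-library function intended for comparing secrets without leaking timing information.

```python
import hmac
from typing import Optional

def may_unlock(spoken_pin: Optional[str], stored_pin: str,
               phone_in_range: bool, require_phone: bool = False) -> bool:
    """Decide whether a voice 'unlock' request should go through (hypothetical logic)."""
    # Without a spoken PIN, a shout through the letterbox gets nowhere.
    if spoken_pin is None:
        return False
    # compare_digest is designed to avoid revealing, through response timing,
    # how much of the PIN was right.
    if not hmac.compare_digest(spoken_pin.encode(), stored_pin.encode()):
        return False
    # Optional extra factor: the owner's phone must be within Bluetooth range.
    if require_phone and not phone_in_range:
        return False
    return True

print(may_unlock(None, "4821", phone_in_range=True))                         # False
print(may_unlock("4821", "4821", phone_in_range=False))                      # True
print(may_unlock("4821", "4821", phone_in_range=False, require_phone=True))  # False
```

The weak spot is plain to see: a spoken PIN is only as secret as the room it is said in, which is why pairing it with a phone-proximity check, or not linking the lock at all, is the safer choice.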

How to stop it

No need to get too caught up in positioning your Echo away from doors and windows because, really, if a burglar wanted to speak to your Alexa, they could.

All it would take would be a good shout through your letterbox. Instead, the simple quick fix is to not remove that secondary level of protection from your smart lock, no matter how much quicker you think getting in your front door might be. Either that or don’t connect your smart lock to your Echo at all.

The chassis hack: Wolf in Alexa’s clothing

It looks like an Echo, it sounds like an Echo, but is it really an Echo? It would be possible for someone to design their own version of a voice assistant with entirely different and evil ends, then just whack it inside an Amazon Echo shell and sell it on to an unsuspecting punter.

It would be easy enough to record Alexa’s voice by asking a genuine Echo to repeat phrases for you, but could you really record enough responses to stop the user rumbling your ruse?

In real terms, you’d only need to keep up the game long enough to harvest account details or whatever else you wanted to pull.

If someone really wanted, of course, they could sit and write answers back in real time for the fake Alexa to mouth, but that’s more than a full-time job. Probably one for serious espionage only.

How to stop it

Buy your Echo from Amazon.

The DolphinAttack: ‘Did Flipper just say something?’

No, a pod of bottle-nosed mammals is not about to invade your kitchen. Instead, it’s their dastardly clever ultrasonic means of communication that’s getting aped here.

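It turns out that Alexa, and indeed pretty much every machine that deals in voice recognition (anything with Siri, Google Assistant and so on), can be made to hear things that we can’t.

The trick is to piggyback a voice command on an ultrasonic carrier, above the roughly 20kHz upper limit of human hearing. Quirks in the devices’ microphone hardware shift the command back down into the audible range, where the voice assistant treats it like any other request. That means that, if you can get hold of the right hardware, which only costs a couple of dollars, by the way, you can ask Alexa and pals to do whatever you want without anyone hearing.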
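The physics can be illustrated with a toy numerical model. This is a minimal sketch under stated assumptions, not the Zhejiang team’s code: a 1kHz tone stands in for a spoken command, it is amplitude-modulated onto an assumed 25kHz carrier, and the microphone’s non-linearity is modelled as a single squared term with an assumed strength of 0.1. A single-bin DFT (`tone_level`, a helper written for this example) measures how much 1kHz energy exists before and after the “microphone”.

```python
import math

FS = 192_000         # sample rate in Hz (assumed; comfortably above twice the highest frequency)
DURATION = 0.05      # seconds of signal
VOICE_HZ = 1_000     # a 1 kHz tone standing in for a spoken command
CARRIER_HZ = 25_000  # ultrasonic carrier, above human hearing

def tone_level(samples, freq, fs=FS):
    """Amplitude of one frequency component, via a single-bin DFT."""
    re = sum(s * math.cos(2 * math.pi * freq * n / fs) for n, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq * n / fs) for n, s in enumerate(samples))
    return 2 * math.hypot(re, im) / len(samples)

t = [n / FS for n in range(int(FS * DURATION))]
voice = [0.5 * math.sin(2 * math.pi * VOICE_HZ * x) for x in t]
# Amplitude modulation: the "command" rides on the ultrasonic carrier.
transmitted = [(1 + v) * math.cos(2 * math.pi * CARRIER_HZ * x) for v, x in zip(voice, t)]
# Crude model of a non-linear microphone: output = input + 0.1 * input squared.
received = [s + 0.1 * s * s for s in transmitted]

print(f"1 kHz in transmitted signal: {tone_level(transmitted, VOICE_HZ):.3f}")  # 0.000
print(f"1 kHz after microphone:      {tone_level(received, VOICE_HZ):.3f}")     # 0.050
```

The transmitted signal carries essentially nothing at 1kHz, so there is nothing audible for a person to notice; it is the squared term that drops a copy of the command back into the band a voice assistant listens to. Real microphones also filter out the leftover ultrasonic components, which this sketch does not model.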

That could be disarming smart home security, ordering all sorts of goodies, phoning premium rate numbers and goodness knows what else. Clever stuff.

You can thank the genii over at Zhejiang University for that one.

How to stop it

Well, the good news is that ultrasonic frequencies don’t travel that well through walls, glass and the like, so you’d need to be within a few inches of the Echo for the DolphinAttack to work.

What’s more, Alexa would repeat what’s said to her before performing the operation, so, even if someone has let you inside the smart home already, they’re probably going to hear what you’re up to soon enough.

Laser hack

Logic suggests that using sound would be the obvious way to hack into a voice-activated device but, in late 2019, researchers from the University of Electro-Communications in Tokyo and the University of Michigan discovered that they could activate a smart speaker’s microphone using a laser.

By modulating the intensity of a laser beam with the audio of a command, they found they could trick a speaker’s microphone into converting the light into an electrical signal as if it were sound. That meant they just needed line of sight to silently ‘speak’ to pretty much anything with a built-in microphone, including the Echo, Google Home and Facebook’s Portal range.
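Here is a minimal sketch of that intensity modulation, under loudly stated assumptions: light output is taken to rise linearly with drive current, the microphone is idealised as responding linearly to light, and the bias and swing currents are hypothetical values chosen only for illustration. It does not model the researchers’ hardware; it just shows how an audio waveform can be carried by a beam’s brightness and recovered on the other side.

```python
import math

# Hypothetical driver settings, for illustration only.
BIAS_MA = 200.0   # steady current that keeps the laser on, in milliamps
SWING_MA = 150.0  # how far the audio pushes the current up or down

def modulate(audio):
    """Amplitude-modulate the laser drive current with audio samples in [-1, 1]."""
    return [BIAS_MA + SWING_MA * max(-1.0, min(1.0, a)) for a in audio]

def idealised_mic(currents):
    """Assume mic output tracks light intensity linearly; strip the DC bias to get the audio back."""
    return [(c - BIAS_MA) / SWING_MA for c in currents]

# A 440 Hz tone sampled at 16 kHz stands in for a spoken command.
command = [math.sin(2 * math.pi * 440 * n / 16_000) for n in range(160)]
beam = modulate(command)
heard = idealised_mic(beam)
print(max(abs(a - b) for a, b in zip(command, heard)))  # tiny: floating-point error only
```

The bias keeps the beam on at all times, so the command shows up only as a flicker in brightness, which is why line of sight is all an attacker needs.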

Using various ‘light commands’ they managed to turn connected lights on and off, open garage doors, and make online purchases – and all from over 100 metres away.

How to stop it

Until somebody changes the way the microphones work, this is a vulnerability that won’t go away, but in the meantime there’s an easy solution: move your Echo away from any windows to make it harder for any baddies to shine a laser at it.

Voice history hack

This one’s an extension of ‘voice squatting’, discovered by cybersecurity firm Check Point Research in August 2020 (and since fixed by Amazon), but it’s worth being aware of what else a nefarious skill might be able to do.

CPR found that your entire voice history could be made available to a hacker with just one click of a fraudulent link that surreptitiously installed a rogue skill.

There’s not a huge amount of value in nicking hours and hours of recordings of you setting timers and turning your lights on and off, but the data could potentially be used to trick voice verification systems and even create audio deepfakes.

Again, there’s probably not a huge appetite in the hacker community for creating fake conversations involving random members of the public, but it’s good to know what’s possible.

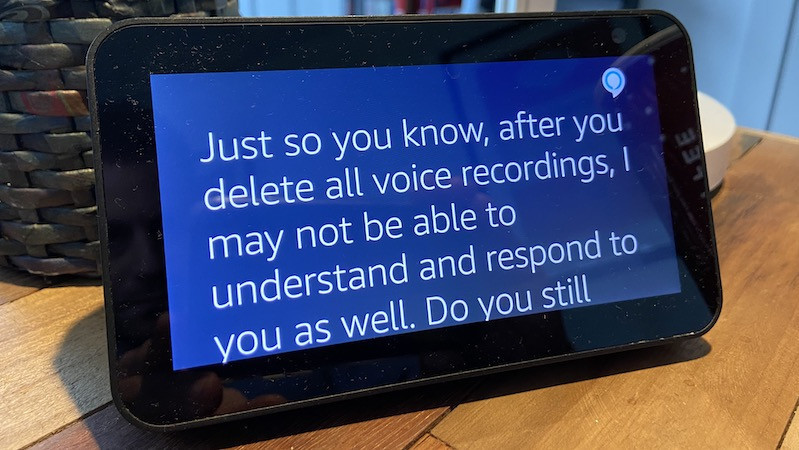

How to stop it

As mentioned above, Amazon has closed this loophole now and there was no evidence that it had ever been exploited, but if you’re concerned about how much of your voice history is being stored you can set it all to be deleted periodically.

First open the Alexa app on your phone and select the More menu in the bottom-right corner. Then tap Settings and choose Alexa Privacy. On the screen that pops up, pick Manage Your Alexa Data and you’ll be able to tell Amazon to delete all your voice commands as soon as your Echo has responded to them, save them for three months, or save them for 18 months.

By default they’ll all be saved until you decide to delete them. You can do this by just asking your Echo to delete everything you’ve said for a set period, or by going to the Review Voice History section of the Alexa Privacy menu, where you can break it right down to specific periods and exact dates.
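The auto-delete options boil down to a simple retention rule. The sketch below models that rule only; it is not Amazon’s implementation, the setting labels are hypothetical, and months are approximated as 30 days.

```python
from datetime import datetime, timedelta

# Hypothetical labels for the auto-delete choices; months approximated as 30 days.
RETENTION = {
    "immediately": timedelta(0),         # delete once Alexa has responded
    "3 months": timedelta(days=90),
    "18 months": timedelta(days=540),
    "until I delete them": None,         # the default: nothing is purged automatically
}

def due_for_deletion(recorded_at, setting, now):
    """Return the recording timestamps that an auto-delete setting would purge at `now`."""
    window = RETENTION[setting]
    if window is None:
        return []
    return [ts for ts in recorded_at if now - ts > window]

now = datetime(2021, 1, 1)
history = [now - timedelta(days=d) for d in (10, 100, 600)]
print(len(due_for_deletion(history, "3 months", now)))  # 2
```

With the default setting nothing is ever purged, which is why it is worth changing if you would rather Amazon didn’t keep years of your requests.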